Hi @Barton. Your question spans several dimensions. This is the way I like to look at things:

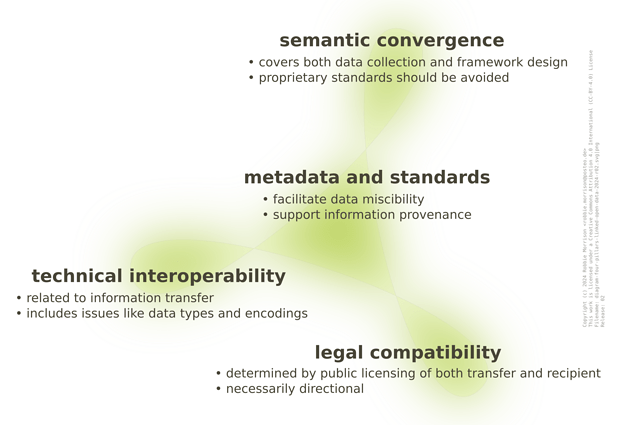

This diagram covers only information that is not encumbered by personal or commercial privacy concerns — as derived from human rights and intellectual property considerations, respectively.

And for clarity, this diagram also excludes content of the type used to train large language models (LLM). Nowadays, things like the complete works of George Orwell are routinely described as AI data. Unlike the numerical analysis data considered here, this AI content is routinely under copyright and perhaps other intellectual property rights.

As the diagram suggests, metadata and standards are the glue that hold this entire enterprise together. Standardization can cover all the aspects shown in the diagram and may range from consensus‑based to formally constituted. Proprietary standards should be avoided.

Linked open data (LOD) is certainly a great design concept. Those who are best placed to generate and maintain specialized datasets do so. Primary data collection is not routinely duplicated. Workflows can be automated and repurposed to good effect. Communications upstream are facilitated. Data users have incentives to contribute back on multiple levels. Information integrity is facilitated.

Issues covering legal compatibility and open licensing are material. And there can be quite some gulf between the open science rhetoric from major research institutions and the inadequate public licensing that they provide in practice. This rift is particularly problematic in Europe where the Database Directive 96/9/EC applies automatically. More here on the necessary legal basis for a knowledge commons.

In terms of semantic alignment, I think the Open Energy Ontology (OEO) provides an excellent foundation for data semantics (noting that I am on the steering committee). You allude to the use case of deploying a single data repository to service the data needs of multiple framework applications (models), statistical methods (analysis), and perhaps even machine learning (AI) projects.

I know of one energy/climate think‑tank that uses well‑known energy system frameworks and recently tried to populate their various models from the one in‑house databank (sorry that I cannot provide names). They looked at the OEO but opted for their own simpler in‑house data model derived from a rough superset of the frameworks they utilize. This process also demonstrates that data semantics apply equally to software design and data collection. In the end, I believe their efforts were successful but are unfortunately limited to in‑house usage at this juncture. Incidentally, I attempted something perhaps similar back in 2002 crossing the TOP‑Energy (previously EUSEBIA) and deeco frameworks: doi:10.5281/zenodo.6619604.

The question of technical interoperability between data nodes is not as challenging, I think. A core issue is the satisfactory retention and processing of metadata and the creation and inclusion of new metadata. Note also that RLI are developing a metadata standard for energy modeling.

Returning to your question about data platforms. And I try to be as impartial as possible about different initiatives. The Open Energy Platform (OEP) from the Reiner Lemoine Institute (RLI) is probably your best first point of call. The OEP was designed from the outset to support LOD and community curation and specifically intended for systems modeling. I suppose Wikidata could be another option, but I imagine it would take a major effort to develop the necessary semantic architecture.

The Wikipedia page on open energy system databases is probably worth a look.

I would like to credit much of development work at RLI to the vision of @ludwig.huelk. There are also some YouTubes by him on the openmod channel (see URL at the bottom of this page) that may be of interest.

Some closing remarks. I think the push for a knowledge commons based on linked open data under appropriate public licensing is receding. Similarly , the expectations surrounding the processing of big data for social benefit have not materialized to any extent. And the European Commission is pushing for data to be treated as an economic good in its own right and retreating from the ideal of genuinely open information serving public interests (but the Commission are now perversely moving in exactly the opposite direction for source code and software).

A mention of data brokerage is also relevant. These services act as intermediaries and some run on open source software. But they are necessarily focused on closed data and bilateral agreements. And they normally regard open data licenses (such as CC‑BY‑4.0) as just another type of legal instrument. I disagree with that view. The principle characteristic of a knowledge commons is social — and data brokerage does not naturally offer support for broad communities and common property (that foundational difference is widely recognized for proprietary versus open source software but not so for non‑open versus open data).

I guess a knowledge commons can be semi‑private — in the sense of being made up of partners who agree to share but not publish. I’ve not come across this halfway house much in practice, but I imagine there are examples.

Finally, synthetic data, whether conventional or generated by AI, is often presented as a way to address matters of personal and commercial privacy. In the case of AI methods, there is a tacit assumption that the trained data cannot reveal household or facilities-specific information and that the underlying training data cannot be approximately regurgitated. See Chai and Chadney (2024) for a recent example. My concern is that synthetic data in general does not yet capture important correlations relative to external contexts (such as real‑time weather) and may provide spurious conclusions when used to study complex systems.

The reason I have covered so much territory is that the FAIR data concept requires policy on most of the issues raised in this post. Good luck with your projects and explorations, R.